Each block header contains a state root hash that identifies the state trie as of that block. On a full node, which keeps the state for the most recent 128 blocks, there are 128 isolated state tries (in reality, it is slightly more complicated than this). When the EVM needs to access the account state, it uses the block number to find the proper root hash, then uses the root hash to determine which trie to query, and finally traverses down the branches to identify the correct leaf containing the account information.
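The lookup order described above (block number, then root hash, then trie, then leaf) can be sketched with a toy model. This is only an illustration under simplifying assumptions: `ToyStateStore` is a hypothetical class, each "trie" is a plain dictionary rather than a Merkle Patricia trie, and the root hashes are placeholder strings.

```python
from collections import OrderedDict

RETAINED_STATES = 128  # number of recent block states kept, as stated in the text


class ToyStateStore:
    """Toy model: block number -> state root -> state 'trie' (a plain dict here)."""

    def __init__(self):
        self.root_by_block = OrderedDict()  # block number -> state root
        self.trie_by_root = {}              # state root -> {address: account}

    def add_block(self, number, state_root, accounts):
        self.root_by_block[number] = state_root
        self.trie_by_root[state_root] = dict(accounts)
        # Prune the oldest states once more than RETAINED_STATES are stored.
        while len(self.root_by_block) > RETAINED_STATES:
            _, old_root = self.root_by_block.popitem(last=False)
            # Only drop the trie if no retained block still points at it.
            if old_root not in self.root_by_block.values():
                self.trie_by_root.pop(old_root, None)

    def get_account(self, number, address):
        state_root = self.root_by_block[number]  # 1. block number -> root hash
        trie = self.trie_by_root[state_root]     # 2. root hash -> trie
        return trie[address]                     # 3. trie -> leaf with account data


store = ToyStateStore()
for n in range(200):
    store.add_block(n, f"root-{n}", {"0xabc": {"nonce": n, "balance": 10 * n}})

print(store.get_account(199, "0xabc"))
print(len(store.root_by_block))  # only the most recent states remain
```

Asking this toy store for a pruned block raises a `KeyError`, which loosely mirrors a full node being unable to serve state older than its retention window.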

## Merkle proof

Now, here's the question: we have account information (`storageRoot`, `codeHash`, balance, and `nonce`); how do we verify its authenticity and correctness? The answer is by using the state root hash.

In Ethereum, the final root hash is derived by hashing the trie layer by layer, from the leaves up to the root, so a change to any leaf changes the root hash.

[Merkle proofs for offline data integrity](https://ethereum.org/en/developers/tutorials/merkle-proofs-for-offline-data-integrity/)

If the values of `hashEFGH`, `hashAB`, and `hashD` are known:
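To make this concrete, here is a minimal sketch that assumes a plain binary Merkle tree over eight leaves `A` to `H`, where we want to prove that leaf `C` belongs to the tree. It uses SHA-256 from the standard library for convenience; Ethereum itself uses Keccak-256 and a Merkle Patricia trie, so treat this as an illustration of the principle only. The helper names (`h`, `merkle_root`, `verify_proof`) are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    """Hash helper (SHA-256 here, as a stand-in for Keccak-256)."""
    return hashlib.sha256(data).digest()


def merkle_root(leaves):
    """Root of a binary Merkle tree; assumes the leaf count is a power of two."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def verify_proof(leaf, proof, root):
    """Recompute the root from a leaf and its sibling hashes, bottom-up."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


leaves = [b"A", b"B", b"C", b"D", b"E", b"F", b"G", b"H"]
root = merkle_root(leaves)

# The three hashes named in the text are all a verifier needs for leaf C.
hash_d = h(b"D")
hash_ab = h(h(b"A") + h(b"B"))
hash_efgh = h(h(h(b"E") + h(b"F")) + h(h(b"G") + h(b"H")))

# Each entry is (sibling hash, whether the sibling sits on the left).
proof_for_c = [(hash_d, False), (hash_ab, True), (hash_efgh, False)]

print(verify_proof(b"C", proof_for_c, root))  # genuine leaf
print(verify_proof(b"X", proof_for_c, root))  # tampered leaf
```

The verifier never sees leaves `A`, `B`, or `D` to `H` themselves, only three hashes, which is why a proof stays small even when the tree is large.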

To begin, we retrieve the latest block information by calling `w3.eth.get_block("latest")`.

Next, we obtain the Merkle proof of the account `0xdAC17F958D2ee523a2206206994597C13D831ec7` and one of its storage values by calling `w3.eth.get_proof('0xdAC17F958D2ee523a2206206994597C13D831ec7', ["0x9c7fca54b386399991ce2d6f6fbfc3879e4204c469d179ec0bba12523ed3d44c"], "latest")`.

Then, we verify the proof by calling `verify_eth_get_proof(proof, block.stateRoot)`. If the proof is valid, `verify_eth_get_proof` returns `True`, which we store in the `isValidProof` variable. Otherwise, it raises a "Failed to verify account proof" error.
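For reference, the first two calls above correspond to the standard `eth_getBlockByNumber` and `eth_getProof` JSON-RPC methods. The sketch below builds the raw request bodies with the standard library only, without sending them. The helper `rpc_request` is a hypothetical name, and passing `False` for full transaction objects is an assumption about what the block request needs here.

```python
import json


def rpc_request(method, params, request_id=1):
    """Build a JSON-RPC 2.0 request body (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


ADDRESS = "0xdAC17F958D2ee523a2206206994597C13D831ec7"
STORAGE_KEY = "0x9c7fca54b386399991ce2d6f6fbfc3879e4204c469d179ec0bba12523ed3d44c"

# Block header data, including stateRoot; False skips full transaction objects.
get_block = rpc_request("eth_getBlockByNumber", ["latest", False], request_id=1)

# eth_getProof takes the account address, a list of storage keys, and a block tag.
get_proof = rpc_request("eth_getProof", [ADDRESS, [STORAGE_KEY], "latest"], request_id=2)

print(json.dumps(get_block))
print(json.dumps(get_proof, indent=2))
```

One design note: because `"latest"` can advance between the two requests, it is probably safer in practice to read the block number from the first response and pass that same number to `eth_getProof`, so that the proof and the `stateRoot` refer to the same block.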

## Q\&A

And gas reports:

Apparently `joinGame` is our most expensive function.

## Conclusion

We're finally done! We've built a complete game of incomplete information on a public blockchain. While we've built a game for recreation, these concepts could easily be applied to other ideas and projects. For example, we could create an anonymous NFT marketplace, where the owners of NFTs remain private, but they can verify their identity and sign off on bids.

### Further reading

In the **Access and credentials** section of a Chainstack node, press **Add to MetaMask**. This prompts you to confirm the new network details.

To add a network manually, go to **Networks** and add a new network with the required chain ID and your Chainstack RPC endpoint.

### Trust Wallet

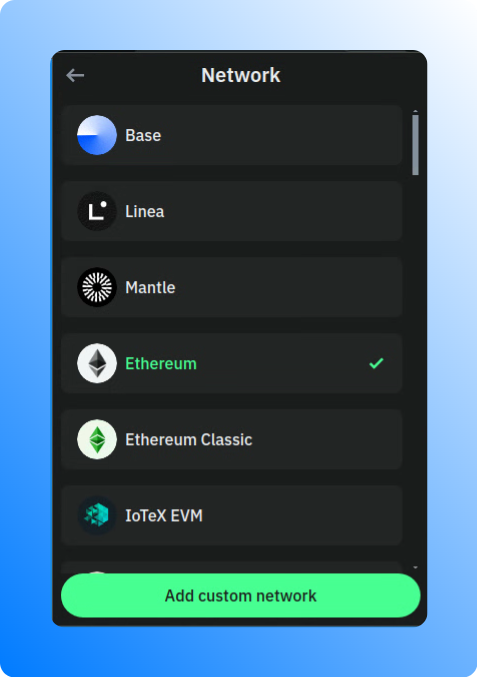

To add a custom RPC to Trust Wallet, open the wallet and navigate to the **Settings** section. Look for the **Network** section to add a custom network with your Chainstack RPC endpoint.

### Rainbow

To add a custom RPC to Rainbow Wallet, open the wallet and navigate to the **Settings** section. Look for the **Networks** section to add your Chainstack RPC endpoint.

### Rabby

To add a custom RPC to Rabby Wallet, open the wallet and navigate to the **Settings (More)** section. Look for the **Modify RPC URL** option to add your Chainstack RPC endpoint.

### Frame Desktop

To add a custom RPC to Frame Desktop, open the wallet and navigate to the **Chains** section. Click on the chain details to add your Chainstack RPC endpoint.

## IDEs

Cloud-based IDEs can use injected providers, of which MetaMask is the most common. By adding a Chainstack RPC node in MetaMask and connecting the wallet to your IDE, you can interact with the network through a Chainstack node.

### Remix IDE

To enable Remix IDE to interact with the network through a Chainstack node, you can follow these steps:

1. Install and set up MetaMask to use a Chainstack node for interaction. You can refer to the guide on Interacting through MetaMask for detailed instructions.

2. Open Remix IDE and go to the Deploy tab (or Deploy and run transactions tab). Here, select **Injected Provider - MetaMask** as your [environment](https://remix-ide.readthedocs.io/en/latest/run.html#environment).

### ChainIDE

To enable ChainIDE to interact with the network through a Chainstack node, you can follow these steps:

1. Install and set up MetaMask to use a Chainstack node for interaction. You can refer to the guide on Interacting through MetaMask for detailed instructions.

2. Open ChainIDE and navigate to the [Connect Wallet](https://chainide.gitbook.io/chainide-english-1/ethereum-ide-1/1.-ethereum-ide/quick-demo#id-3.-connect-to-ethereum-evm) button. Here, select **Injected Web3 Provider** and then **MetaMask**.

## Programming languages and libraries

### Communication protocols

WebSockets and HTTP are essential communication protocols in web applications. WebSockets enable two-way, persistent communication between a client and a server, useful for real-time price feeds, live transaction monitoring, and event notifications. In contrast, HTTP follows a one-way, request-response model, ideal for retrieving periodic price updates and transaction histories.
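The difference shows up in the JSON-RPC messages themselves. The following sketch, using only the standard library, contrasts a one-shot request you might send over HTTP with a subscription request you might send over a WebSocket connection. The function names are hypothetical, and the sample notification is illustrative data rather than a real node response.

```python
import json


def http_poll_request(request_id=1):
    """One-shot request: the client asks and the server answers once."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "eth_blockNumber", "params": []}


def ws_subscribe_request(request_id=1):
    """Subscription request: afterwards the server pushes each new block header."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "eth_subscribe", "params": ["newHeads"]}


def is_subscription_push(message):
    """Server-pushed notifications use method 'eth_subscription' and carry no 'id'."""
    return message.get("method") == "eth_subscription" and "id" not in message


# Illustrative notification shape, with made-up values.
sample_push = {
    "jsonrpc": "2.0",
    "method": "eth_subscription",
    "params": {"subscription": "0x1", "result": {"number": "0x1b4"}},
}

print(json.dumps(http_poll_request()))
print(json.dumps(ws_subscribe_request()))
print(is_subscription_push(sample_push))
```

With HTTP, a client that wants fresh data has to keep resending the first request; with WebSockets, it sends the second request once and then only handles incoming notifications.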

### web3.js

Build DApps using [web3.js](https://github.com/web3/web3.js) and Ethereum nodes deployed with Chainstack.

**Multi-chain Wallet Balance Aggregator (Chainstack Powered)**

This simple DApp uses the ChainstackProvider from ethers.js to interact with the chains listed below. Learn more about the ChainstackProvider from ethers.js in the Chainstack documentation.

If a request fails, the DApp displays the error. Otherwise, it shows the wallet balance on each chain, formatted to five decimal places:

* Ethereum, in ETH
* Polygon, in MATIC
* BNB Smart Chain, in BNB
* Arbitrum, in ETH

For developers interested in the technical implementation and contribution to the project, please refer to our [GitHub repository](https://github.com/chainstacklabs/chainstack-rpc-dashboard-functions), which contains:

1. Complete technical documentation

2. Setup instructions

3. Configuration guidelines

## FAQ

### Visualise ERC-20 balance changes over time

The next example uses the same `erc20_balances` dataset as one of the previous examples. This time, we’ll fetch and visualize how much [WETH](https://etherscan.io/token/0xc02aaa39b223fe8d0a0e5c4f27ead9083c756cc2) is in the [`WETH-USDT` pool](https://etherscan.io/address/0x0d4a11d5EEaaC28EC3F61d100daF4d40471f185) from Uniswap V2.

In a new file, paste the following code:

As you can see, we can use `cryo` to fetch data and manipulate it with Python, a very powerful combo.

## Conclusion

The integration of `cryo` with Python is a significant advancement for blockchain data analysis. It combines `cryo`'s efficient data extraction capabilities with Python's powerful data processing and visualization tools. This synergy, coupled with high-performance Chainstack Global Nodes, enables users to easily extract, analyze, and visualize blockchain data, making it an invaluable resource for developers, researchers, and enthusiasts in the blockchain community. The practical examples demonstrate this integration's real-world utility, highlighting its potential to yield insightful and actionable information from complex blockchain datasets. In essence, `cryo` and Python offer an effective and accessible platform for in-depth blockchain data exploration.

### About the author
